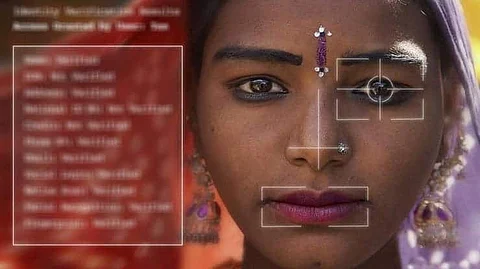

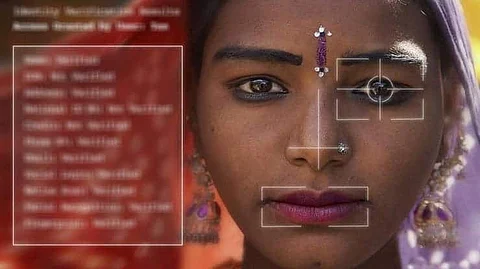

Facial recognition technology has penetrated almost every market. From surveillance cameras to unlock features in smartphones, this branch of artificial intelligence has become a key part of our daily lives. Some months ago, the startup FDNA created the DeepGestalt algorithm to identify genetic disorders from facial images. While the technology has proved useful, not everyone is on board with it. Critics question its access to, and collection of, seemingly unlimited databases connecting names and faces, and accuse it of invading privacy. Further, the recent outrage over the killing of George Floyd has sparked debates about the existing bias in facial recognition technology.

The COVID-19 outbreak acted as a disruptor for several digital technologies, including AI-powered computer vision, of which facial recognition is a prominent application. Numerous surveillance cameras and sensors were installed in cities around the world to scan for possible positive cases, check whether people were following social distancing protocols, and more. However, it was a Faustian bargain, as it involved exchanging privacy for safety and health. Though it currently seems a necessary measure, questions remain about the future of these devices once the pandemic fizzles out. One cannot rule out the possibility of government bodies misusing facial recognition tools for inhumane, freedom-infringing purposes. For instance, it has been widely reported that the Chinese government is leveraging this technology to target the Uighur Muslims in Xinjiang province. Further, the recent white paper on AI regulation by the European Union failed to include any reference to facial recognition, much to the shock of many. Hence, the public's fear of being watched at all times persists, echoing the dystopian world of George Orwell's '1984', where Big Brother watches every inhabitant all the time.

Facial recognition software is also notorious for racial and gender bias. In February, the BBC wrongly labeled black MP Marsha de Cordova as her colleague Dawn Butler. In June, Microsoft's AI editor software attached an image of Leigh-Anne Pinnock to an article headlined "Little Mix star Jade Thirlwall says she faced horrific racism at school." Misidentification and the harassment of minorities due to AI have thus become a common problem. A 2018 MIT study of three commercial gender-recognition systems found they had error rates of up to 34% for dark-skinned women, which is nearly 49 times that for white men.

Earlier this year, amid the #BlackLivesMatter protests, IBM CEO Arvind Krishna informed the US Congress in a letter that the company would no longer offer its facial recognition or analysis software, and that it firmly opposes technology used for mass surveillance, racial profiling, and violations of fundamental human rights and freedoms.

Two days later, Amazon announced in a blog post that it would stop selling its software, Rekognition, to law enforcement for a year, in the hope that Congress would pass stronger regulation around it. Then Microsoft president Brad Smith declared, in a remote interview at a Washington Post Live event, that the company would limit the use of its facial recognition systems. However, this is not enough, because none of these Big Tech companies takes any precautionary measures to prevent racial bias beforehand. Also, if law enforcement departments must use facial recognition technology, a robust regulatory framework is necessary. Government action will also be needed to encourage the adoption of diverse training data and data audit practices. The lack of a legal framework can lead to abuse, such as obtaining images without a person's knowledge or consent and using them in ways they would not approve of. Blanket surveillance can also infringe on citizens' right to political consent. Or worse, there could be a repeat of incidents like US Immigration and Customs Enforcement (ICE) misusing Palantir to watch and track immigrants. The technology can also be misused for stalking.

Further, incorrect facial analysis can be catastrophic. For example, if an iPhone misidentifies someone as you and grants them access to your phone, they may misuse it for malicious purposes. Last year, another facial identification tool wrongly flagged a Brown University student as a suspect in the Sri Lanka bombings, and the student went on to receive death threats. Hence it was encouraging when the Californian cities of San Francisco and Oakland and the Massachusetts city of Somerville banned the use of facial recognition software by public agencies.

According to a research report "Facial Recognition Market" by Component, the facial recognition industry is expected to grow from US$3.2 billion in 2019 to US$7.0 billion by 2024 in the US. Yet the controversies and concerns regarding facial recognition need to be addressed as soon as possible to instill trust among the public.
