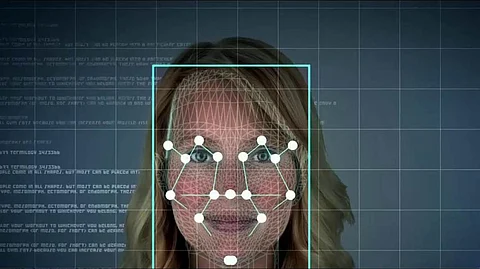

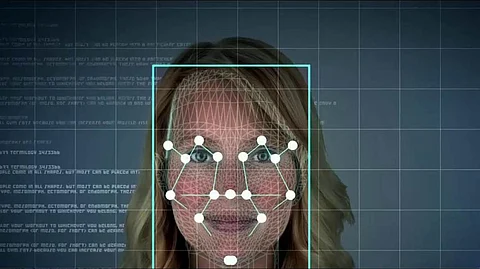

Deepfakes are multiplying quickly in today's fast-moving digital world. A deepfake is a form of synthetic media in which artificial intelligence is used to replace a person in an existing image, audio recording, or video with someone else's likeness. Their adoption is growing at an astounding speed, and they make it easy to spread fake news and rumors.

To tackle misinformation and curb deepfakes, the video-sharing platform YouTube issued its policies for handling disinformation last month. To rid the platform of manipulated videos, the Google-owned company said it will remove content that has been technically manipulated or doctored in a way that could mislead users.

Last year, YouTube took down a doctored video of Nancy Pelosi, Speaker of the United States House of Representatives, which had reportedly been manipulated to make her appear intoxicated. YouTube will also terminate channels that try to impersonate another person or channel, or that artificially inflate the number of views, likes, and comments on a video.

Social networking giant Facebook, which faced criticism last year for refusing to remove the unflattering Pelosi video, announced a deepfake ban in January. Twitter, meanwhile, released its own rule to counter disinformation just a day after YouTube issued its policies. The new rule is set to take effect on March 5 and targets misleading content, especially altered photos and videos.

Along with banning the most egregious offenders, Twitter will label some tweets as manipulated media and link to a Twitter Moment that provides more context. The new policy comes amid growing concerns that deepfakes and other manipulated media could influence the 2020 election and beyond.

In a statement, Del Harvey, Twitter's Vice President of Trust and Safety, said, "Part of our job is to closely monitor all sorts of emerging issues and behaviors to protect people on Twitter." He added, "Our goal was really to provide people with more context around certain types of media they come across on Twitter and to ensure they're able to make informed decisions around what they're seeing."

When Twitter finds altered media, it may take a series of actions: labeling the tweets, reducing their visibility by removing them from algorithmic recommendations, providing additional context, and showing a warning before people retweet them. Media that is likely to cause harm will be removed outright.

Beyond these moves by tech companies, alliances of academic institutions, non-profits, and others are developing techniques to detect misleading AI-generated media across diverse channels. Last year, for example, a team from the University of California, Berkeley and the University of Southern California trained a model that looks for precise facial action units: data points describing a person's facial movements, tics, and expressions, such as when they raise their upper lip and how their head rotates when they frown. The model could spot manipulated videos with greater than 90 percent accuracy.

Likewise, in August 2018, members of the Media Forensics program at the US Defense Advanced Research Projects Agency (DARPA) experimented with systems that identify AI-generated videos from cues such as unnatural blinking, strange head movements, and odd eye color.

_____________

Disclaimer: Analytics Insight does not provide financial advice or guidance. Also note that the cryptocurrencies mentioned/listed on the website could potentially be scams, i.e. designed to induce you to invest financial resources that may be lost forever and not be recoverable once investments are made. You are responsible for conducting your own research (DYOR) before making any investments. Read more here.