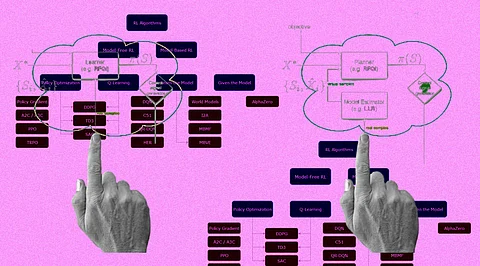

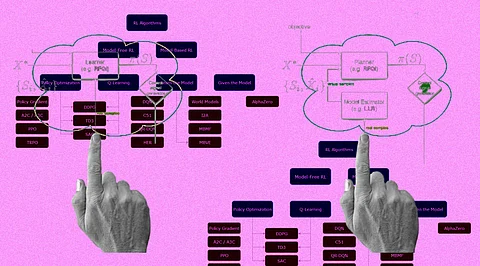

Reinforcement learning is one of the most common terms in building AI models with machine learning algorithms, and it is familiar across the global tech market. It trains an agent through interaction and feedback so that the agent can make reliable decisions. There are two broad categories of reinforcement learning: model-based and model-free. In both, learning often relies on neural networks and machine learning algorithms to achieve a complicated objective. Let's dig into the concepts of model-based and model-free reinforcement learning to build a stronger understanding of how these AI models learn.

First of all, the simplest explanation of model-based reinforcement learning is that the agent has access to a model of the environment. The model defines the probability of ending up in the next state (at time t+1) given the current state and action. This is known as the transition probability. When the environment is deterministic, the model can be an explicit function that returns the next state directly.
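To make the idea of a transition probability concrete, here is a minimal sketch of a model stored as a lookup table. The two states, two actions, and all probabilities are hypothetical and chosen only for illustration; they do not come from any particular environment.

```python
import random

# Hypothetical toy environment (all names and numbers are assumptions).
# TRANSITIONS[(state, action)] maps each next state to
# P(next_state | state, action).
TRANSITIONS = {
    ("cool", "run"): {"cool": 0.5, "hot": 0.5},
    ("cool", "rest"): {"cool": 1.0},
    ("hot", "run"): {"hot": 1.0},
    ("hot", "rest"): {"cool": 0.75, "hot": 0.25},
}


def transition_prob(state, action, next_state):
    """Return P(next_state | state, action); unlisted outcomes have probability 0."""
    return TRANSITIONS[(state, action)].get(next_state, 0.0)


def sample_next_state(state, action, rng=random):
    """Draw one next state according to the model's distribution."""
    dist = TRANSITIONS[(state, action)]
    states = list(dist)
    weights = [dist[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]
```

An agent holding such a table can ask "what would happen if I took this action?" without touching the real environment, which is the defining feature of the model-based category.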

A model can be either known or learned. A known model is given to the agent up front, so the agent does not have to learn it through training. A learned model is not provided: the agent must learn it, often as a neural network, from sufficient and relevant experience, so that it can make good guesses about the next state. Because it relies on an explicit model, model-based reinforcement learning usually needs fewer samples from the real environment, but the model itself may be inaccurate.
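As a rough illustration of a learned model, the sketch below estimates transition probabilities by counting observed outcomes. This count-based table is a deliberately simple stand-in for the neural network mentioned above, and the hidden dynamics in `true_step` are a hypothetical toy environment, not a real one.

```python
import random
from collections import Counter, defaultdict


def true_step(state, action, rng):
    """Hidden dynamics of a hypothetical environment; the learner only sees its outputs."""
    if action == "rest":
        return "cool"
    if state == "cool":
        return "hot" if rng.random() < 0.5 else "cool"
    return "hot"


def learn_model(num_samples=20_000, seed=0):
    """Estimate P(next_state | state, action) from sampled experience."""
    rng = random.Random(seed)
    counts = defaultdict(Counter)
    for _ in range(num_samples):
        state = rng.choice(["cool", "hot"])
        action = rng.choice(["run", "rest"])
        counts[(state, action)][true_step(state, action, rng)] += 1
    # Normalize the counts for each (state, action) pair into probabilities.
    return {
        sa: {s2: n / sum(c.values()) for s2, n in c.items()}
        for sa, c in counts.items()
    }
```

With few samples the estimates can be far from the true probabilities, which is one way a learned model ends up inaccurate.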

Reinforcement learning qualifies as model-based if the algorithm explicitly refers to the model of the environment. The archetypal model-based algorithms are the dynamic programming methods, policy iteration and value iteration. They use the model's predicted distribution over next states and rewards to compute the optimal actions. For this to work, the model must supply the state transition probabilities and the expected reward for every state-action pair.
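The following is a minimal sketch of value iteration on a known model. The two-state MDP in `MODEL`, the discount factor, and the stopping tolerance are all assumptions made up for this example.

```python
# Hypothetical two-state MDP (all names and numbers are assumptions).
# MODEL[state][action] is a list of (probability, next_state, reward) triples.
MODEL = {
    "cool": {
        "run": [(0.5, "cool", 2.0), (0.5, "hot", 2.0)],
        "rest": [(1.0, "cool", 1.0)],
    },
    "hot": {
        "run": [(1.0, "hot", -1.0)],
        "rest": [(0.75, "cool", 0.0), (0.25, "hot", 0.0)],
    },
}


def q_from_model(values, state, action, gamma):
    """Expected return of taking `action` in `state`, computed from the model."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in MODEL[state][action])


def value_iteration(gamma=0.9, tol=1e-8, max_sweeps=10_000):
    """Repeat Bellman optimality backups until the values stop changing."""
    values = {s: 0.0 for s in MODEL}
    for _ in range(max_sweeps):
        delta = 0.0
        for s in MODEL:
            best = max(q_from_model(values, s, a, gamma) for a in MODEL[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # The greedy policy picks the action with the highest model-based value.
    policy = {
        s: max(MODEL[s], key=lambda a: q_from_model(values, s, a, gamma))
        for s in MODEL
    }
    return values, policy
```

Note that the loop never interacts with an environment: every number comes from the transition probabilities and rewards stored in the model.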

Neural networks are often employed to learn and generalize value functions. In supervised learning such trained networks are also called models, but that is a different sense of the word from a model of the environment. One important advantage of having a model of the environment is that it reduces the need for the agent to undergo trial-and-error in its environment, a process that can be time-consuming and even dangerous in time-sensitive situations. Model-based reinforcement learning has been especially successful in artificial intelligence systems that play board games at an expert level, where the environment is deterministic.

But there is no need to worry if an accurate model is not available as part of the problem definition. One can try model-free reinforcement learning, which in such cases often performs better than model-based reinforcement learning.

Yet again, in simple terms, model-free reinforcement learning does not need a model of the environment. It learns a value function or a policy directly. The problem is still usually framed as an MDP (Markov Decision Process), but the agent never builds the transition or reward functions of that MDP. It simply learns by trying different behaviors and observing the rewards it receives. Positive rewards reinforce the policy so that the behavior is repeated, while negative rewards push the policy away from that behavior.

Model-free methods are explicitly trial-and-error learners. They do not try to predict how the environment will respond to their actions. A key benefit is that they avoid the main bottleneck of the model-based category, which is building a sufficiently accurate model of the environment. They also serve as building blocks for model-based methods, and they rely on stored values for state-action pairs.

When the environment changes the way it reacts to the agent's actions, a model-free agent has to collect new experience in the modified environment before its behavior adapts. On the other hand, model-free methods can be applied in many environments and can react quickly to a newly encountered state, because they only look up stored values instead of planning. In model-free reinforcement learning the agent perceives the environment, takes an action, and measures the resulting reward, positive or negative. There is no direct knowledge or model of the environment: every outcome is discovered by trial and error. The agent learns the consequences of its actions through experience, using algorithms such as Q-Learning.
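A minimal sketch of tabular Q-Learning shows the perceive, act, and measure-reward loop in code. The five-cell corridor environment, the hyperparameters, and the step cap are all hypothetical choices for illustration. The learner only calls `step` and never inspects how it works.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Toy environment dynamics; the learner treats this as a black box."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def greedy_action(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # Stored values for every state-action pair, initialized to zero.
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(N_STATES)}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on steps per episode
            # Epsilon-greedy: explore sometimes, otherwise exploit stored values.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Q-Learning target: observed reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state].values())
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q
```

After training, the stored values favor moving right in every non-goal cell, even though the agent was never told where the reward is or how movement works.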
