Dell's server infrastructure powers a wide range of applications in customer data centers. With the advent of the cloud, some of these applications are moving to cloud-based infrastructure, and it is important for us to know which workloads are moving to the cloud and which are staying in the data center. Sales teams at Dell have sparse visibility into how customers use our server infrastructure and rely on customers reaching out for help before server upgrade/refresh conversations can begin. Future innovation in server and infrastructure products depends largely on visibility into current usage in customer data centers. The ability to reliably classify workloads from applications captured in server logs, or from free-format text in Dell's internal CRM systems, is therefore key to these efforts, hence the need for an advanced NLP-based workload classification algorithm.

Knowledge of enterprise infrastructure workloads is critical for Dell to succeed in the market. Current methods of classifying workloads fall short because they rarely go beyond keyword searches. Our product management, sales, and engineering teams need precise information about the workloads in customer environments to plan future roadmaps for product design, development, management, and sales. It is also a key insight for business leaders, helping them focus on the right investment areas and plan the right strategic trajectories for Dell's growth.

We found no effort in the literature we reviewed toward leveraging unstructured server logs beyond system performance metrics, and we realized that such a capability would add tremendous value to Dell.

Enterprise infrastructure workload classification is not a new subject. Within and outside Dell, a lot of effort has gone into workload classification through methods such as keyword searches and performance-metric-based approaches. However, the challenge has always been covering a large collection of infrastructure devices and reducing the white space left by past algorithms due to lack of data. These shortcomings often lead to decisions driven by poor-quality data that underrepresents the true story in the market.

Because unstructured server logs capture information about applications and their impact on server performance, developing a stronger algorithm became possible. The aim is to leverage both unstructured and structured data from server logs and CRM systems to improve the classification of infrastructure workloads.

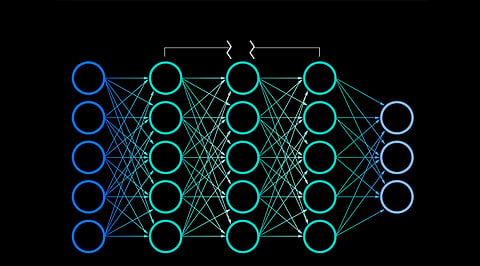

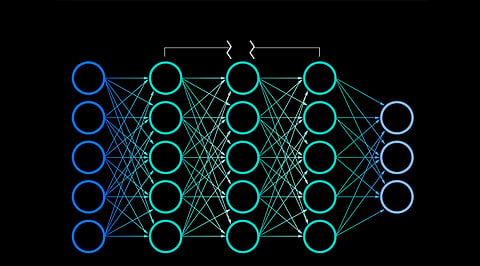

We propose a solution to these challenges by deploying a neural network-based NLP (natural language processing) technique that can identify the type of workload running on the server. The neural network model is trained on internal CRM data and on Wikipedia or a similar external knowledge source. It can predict the type of workload from either a) the free-format text description in CRM or b) the application name from product logs. It does so by using the concept of "workload signatures".

Our approach accepts two kinds of input: a) opportunity description text from CRM or b) an app name from product logs. It feeds the input to the neural network model to predict the kind of workload running on the server, using the neural network representation of workload signatures (a set of words whose vectors can be added or subtracted in vector space to represent a workload). The advantage of workload signatures is that they are intuitive to understand and can easily be adjusted to improve output accuracy in the future.

Here are the steps involved in creating the tool:

https://www.quora.com/in/What-is-doc2vec

2. Define workload signatures: A workload signature is the neural network representation of a set of keywords that define a particular kind of workload. The neural network representation can be created by adding or subtracting the vector representation of individual words.

What is an example of a workload signature from daily life?

When we add the neural network representation of the word "American" to the representation of the word "Pop", we get a vector that is very close to the vector representation of the famous singer "Lady Gaga"!

Similarly,

Client virtualization can be defined by adding the following words in the vector space: "client" + "virtualization" + "software application". The vector representation of "client virtualization" is very close to the sum of the vector representations of "client", "virtualization", and "software application".

How are workload signatures created?

Advantage of signatures

To exclude a word from a workload, all we have to do is subtract that word's vector from the signature.

E.g., to find a Japanese pop artist like Lady Gaga, we can do the following vector math:

vector("Lady Gaga") + vector("Japanese") - vector("American") ≈ vector("Ayumi Hamasaki")

Similarly, suppose we do not want the Collaborative software workload to include Skype. We can then define the workload signature as follows:

signature(Collaborative software) = vector("Collaboration") + vector("communicate") – vector("skype")
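The signature arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not the production code: the three-dimensional vectors and the `build_signature` helper are hypothetical, and in practice the word vectors would come from the trained doc2vec model.

```python
# Toy 3-dimensional word vectors; the values are made up for illustration.
# A real system would look these up in the trained doc2vec model.
WORD_VECTORS = {
    "collaboration": [0.9, 0.1, 0.0],
    "communicate":   [0.7, 0.3, 0.1],
    "skype":         [0.2, 0.9, 0.0],
}


def build_signature(positive, negative, vectors):
    """Add the vectors of the positive words and subtract those of the negative words."""
    dim = len(next(iter(vectors.values())))
    signature = [0.0] * dim
    for word in positive:
        signature = [s + v for s, v in zip(signature, vectors[word])]
    for word in negative:
        signature = [s - v for s, v in zip(signature, vectors[word])]
    return signature


# signature(Collaborative software) = collaboration + communicate - skype
collaborative_software = build_signature(
    positive=["collaboration", "communicate"],
    negative=["skype"],
    vectors=WORD_VECTORS,
)
print(collaborative_software)
```

Keeping the positive and negative words as plain lists means a signature can be read and edited by someone who does not work with the model directly.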

3. Identify the input data to be labeled using the machine learning algorithm: the following two cases arise

1. Input is CRM free-format text

a. Let J be the total number of workload types, indexed by j

b. Convert each workload signature to the vector sum or difference of its constituent words. Call this wl[j]

c. For each workload type j, calculate the similarity between wl[j] and the vector representation of crm_text (obtained by inferring a vector for the text from the trained model). Call it similarity[j]

d. Mathematically, similarity[j] = 1 - spatial.distance.cosine(wl[j], model.infer_vector(crm_text.split(), steps=x, alpha=y)), where spatial.distance.cosine is SciPy's cosine distance

e. x (the number of inference steps) and y (the learning rate) can be tuned iteratively to get the best accuracy

f. Find the j for which similarity[j] is highest.

g. Assign the workload type with that index j (found in f) as the predicted workload

2. Input is an app name from product logs

a. Let J be the total number of workload types, indexed by j

b. Convert each workload signature to the vector sum or difference of its constituent words. Call this wl[j]

c. For each workload type j, calculate the similarity between wl[j] and the vector representation of app (obtained by inferring a vector for the app name from the trained model). Call it similarity[j]

d. Mathematically, similarity[j] = 1 - spatial.distance.cosine(wl[j], model.infer_vector(app.split(), steps=x, alpha=y)), where spatial.distance.cosine is SciPy's cosine distance

e. x (the number of inference steps) and y (the learning rate) can be tuned iteratively to get the best accuracy

f. Find the j for which similarity[j] is highest.

g. Assign the workload type with that index j (found in f) as the predicted workload
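Both cases follow the same procedure, which can be sketched as below. This is a hedged, self-contained illustration rather than the actual implementation: the word vectors and signatures are invented, and the `infer_vector` function here is a simple stand-in (an average of known word vectors) for the doc2vec model's `infer_vector`, so no trained model is required to run it.

```python
import math

# Hypothetical toy word vectors; a real system would use the trained doc2vec model.
WORD_VECTORS = {
    "client":         [0.90, 0.10, 0.00],
    "virtualization": [0.80, 0.20, 0.10],
    "desktop":        [0.85, 0.15, 0.05],
    "database":       [0.10, 0.90, 0.10],
    "sql":            [0.00, 0.95, 0.20],
    "storage":        [0.20, 0.80, 0.30],
}

# Illustrative signatures: (words to add, words to subtract).
SIGNATURES = {
    "Client virtualization": (["client", "virtualization"], []),
    "Data management": (["database", "storage"], []),
}


def signature_vector(positive, negative):
    """Steps a-b: turn a signature into the sum/difference of its word vectors."""
    total = [0.0, 0.0, 0.0]
    for word in positive:
        total = [t + v for t, v in zip(total, WORD_VECTORS[word])]
    for word in negative:
        total = [t - v for t, v in zip(total, WORD_VECTORS[word])]
    return total


def infer_vector(text):
    """Stand-in for doc2vec inference: average the vectors of the known words."""
    known = [WORD_VECTORS[t] for t in text.lower().split() if t in WORD_VECTORS]
    if not known:
        return None
    return [sum(column) / len(known) for column in zip(*known)]


def cosine_similarity(a, b):
    """Equivalent to 1 - cosine distance; returns 0.0 for a zero-length vector."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def classify(text):
    """Steps c-g: score every workload type and return the best match."""
    vector = infer_vector(text)
    if vector is None:
        return None, {}
    similarity = {
        name: cosine_similarity(signature_vector(pos, neg), vector)
        for name, (pos, neg) in SIGNATURES.items()
    }
    return max(similarity, key=similarity.get), similarity


print(classify("SQL database refresh")[0])
print(classify("desktop virtualization rollout")[0])
```

With a real Gensim model, the stand-in would be replaced by the model's own `infer_vector` call. Note that, as far as we are aware, recent Gensim releases name the `steps` argument `epochs`, so the keyword may need adjusting for the installed version.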

4. Redefine the workload signatures

A very useful feature of the workload signature technique is that the output accuracy can be improved easily by making simple changes to signature words.

E.g., if in the future the business does not want to treat "SQL" as a "Data management" kind of workload, we can simply subtract the vector representation of "SQL" from the signature definition of "data management".
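The effect of such a refinement can be checked numerically. The sketch below uses made-up three-dimensional vectors and a hypothetical `signatures` dictionary; it only illustrates that subtracting a word lowers the signature's similarity to that word, and whether the predicted label actually changes depends on the other signatures in play.

```python
import math

# Hypothetical toy vectors standing in for the trained model's word vectors.
WORD_VECTORS = {
    "database": [0.1, 0.90, 0.1],
    "storage":  [0.2, 0.80, 0.3],
    "sql":      [0.0, 0.95, 0.2],
}

# Signature definitions kept as editable word lists (illustrative structure).
signatures = {"Data management": {"add": ["database", "storage"], "subtract": []}}


def signature_vector(definition):
    """Sum the 'add' word vectors and subtract the 'subtract' word vectors."""
    total = [0.0, 0.0, 0.0]
    for word in definition["add"]:
        total = [t + v for t, v in zip(total, WORD_VECTORS[word])]
    for word in definition["subtract"]:
        total = [t - v for t, v in zip(total, WORD_VECTORS[word])]
    return total


def cosine_similarity(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm


sql = WORD_VECTORS["sql"]
before = cosine_similarity(signature_vector(signatures["Data management"]), sql)

# Redefine the signature: stop treating "SQL" as data management.
signatures["Data management"]["subtract"].append("sql")
after = cosine_similarity(signature_vector(signatures["Data management"]), sql)

print(f"similarity to 'sql' before: {before:.3f}, after: {after:.3f}")
```

Because the change is a one-line edit to a word list, the signature can be revised without retraining the underlying model.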

Software/Tools Used

We used the following modules in Python for developing the solution:

Gensim – For creating the doc2vec model

Beautiful Soup – For extracting data from Wikipedia and parsing XML data

Results/Findings

The method was developed and tested on three separate data sources: CRM solution text, server logs, and Support Tool logs. We achieved an accuracy of more than 80%, and periodic refinement of the workload signatures improved the numbers further over time. We were able to combine the output with system performance metrics such as CPU utilization, storage/memory consumption, and IO throughput to come up with recommendations for customers' specific workload needs, directly benefiting sales teams. The results were delivered through our internal sales enablement system, which was transformed to support workload-based customer proposal development.

Summary/Conclusion

The methodology opened a way of engaging with customers through workload-based selling of infrastructure, which aligns us closely with customers' needs. The data sources are continuously refreshed with both new data and refined input from data centers, improving the algorithm in the long run. It has also paved the way for new thinking around leveraging other data sources, such as orders data, to classify workloads, with this algorithm acting as a validation layer that uses live data from customers' data centers.
