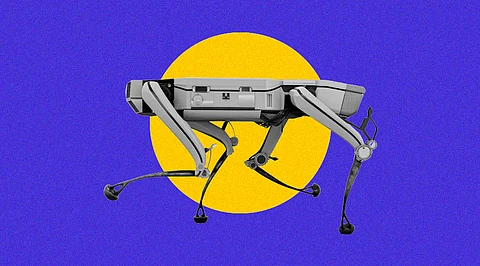

A legged robotic system is a robot that moves on legs rather than wheels or tracks. Such robots are especially useful in challenging terrain or environments where wheels and tracks cannot easily maneuver, and their greater mobility and dexterity let them traverse uneven surfaces and climb stairs.

Researchers from the Computer Science and Artificial Intelligence Laboratory (CSAIL) and MIT's Improbable Artificial Intelligence Lab have created a legged robotic system that can dribble a soccer ball under the same conditions as humans. Its dribbling is impressive, even if it doesn't yet match Lionel Messi's level of skill. Using a combination of onboard sensing and computing, the bot navigated several natural terrains, including sand, gravel, mud, and snow, and adapted to how each one affects the motion of the ball. Like a dedicated athlete, "DribbleBot" could get back up after falling and recover the ball.

Building soccer-playing robots has long been a focus of research. Rather than hand-scripting skills for responding to terrains such as snow, gravel, sand, grass, and pavement, which are difficult to script, the team sought to learn automatically how to actuate the legs while dribbling. That is where simulation comes in.

The simulation is a digital twin of the real world that includes a robot, a ball, and terrain. After the robot and other assets are loaded and the physics parameters are set, the simulator handles the forward simulation of the dynamics. It runs 4,000 versions of the robot concurrently in real time, which makes data collection 4,000 times faster than with a single robot and produces a large amount of experience. The robot starts out not knowing how to dribble the ball; it receives positive feedback when it succeeds and negative reinforcement when it fails, so it is essentially working out the order in which its legs should exert forces. "One facet of this reinforcement learning approach is that we must develop a nice reward to enable the robot to learn a successful dribbling habit," says MIT Ph.D. student Gabe Margolis, who co-led the work with Yandong Ji, a research assistant at the Improbable AI Lab. "After we've created that reward, the robot can start practicing: It takes a few days in real time and hundreds of days in the simulator." Over time, the robot gets better and better at manipulating the soccer ball to match the desired velocity.

The team also incorporated a recovery controller into the bot's system, which lets it recover from falls and handle unexpected terrain. With this controller, the robot can overcome out-of-distribution disruptions and terrains by getting back up after a fall and then switching back to its dribbling controller to continue pursuing the ball.
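The article does not publish DribbleBot's actual reward or controller logic, so the following Python sketch is purely illustrative. It assumes a hypothetical reward that equals 1 when the ball's velocity matches a commanded velocity, decays as the velocity error grows, and subtracts a penalty for falling, plus a hypothetical supervisor that hands control to a recovery controller after a fall. The names `DribbleState`, `dribble_reward`, `batch_rewards`, and `select_controller`, and the parameters `sigma` and `fall_penalty`, are all assumptions, not the team's code.

```python
import math
from dataclasses import dataclass


@dataclass
class DribbleState:
    # Hypothetical per-environment snapshot (illustrative, not DribbleBot's actual state).
    ball_vel: tuple[float, float]  # measured ball velocity in the ground plane (m/s)
    cmd_vel: tuple[float, float]   # commanded (desired) ball velocity (m/s)
    fallen: bool                   # True if the robot's body has fallen over


def dribble_reward(s: DribbleState, sigma: float = 0.25, fall_penalty: float = 1.0) -> float:
    """Hypothetical shaped reward: 1.0 when the ball exactly tracks the command,
    decaying toward 0 as the squared velocity error grows, minus a fall penalty."""
    ex = s.ball_vel[0] - s.cmd_vel[0]
    ey = s.ball_vel[1] - s.cmd_vel[1]
    tracking = math.exp(-(ex * ex + ey * ey) / sigma)
    return tracking - (fall_penalty if s.fallen else 0.0)


def batch_rewards(states: list[DribbleState]) -> list[float]:
    """Score many simulated copies of the robot in one call (e.g. 4,000 environments)."""
    return [dribble_reward(s) for s in states]


def select_controller(fallen: bool) -> str:
    """Hypothetical supervisor: use the recovery controller after a fall,
    and the dribbling controller whenever the robot is upright."""
    return "recovery" if fallen else "dribbling"


if __name__ == "__main__":
    # 4,000 identical environments where the ball moves at half the commanded speed.
    envs = [DribbleState((0.5, 0.0), (1.0, 0.0), False) for _ in range(4000)]
    rewards = batch_rewards(envs)
    print(len(rewards), round(rewards[0], 4))
```

The exponential shaping is one common choice for velocity-tracking rewards: it still gives the learner a graded signal when the ball is off target, rather than rewarding only exact success.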

DribbleBot's mobility, and the range of terrain it can cross, is more limited when it is dribbling a soccer ball than when it is walking on its own, because the robot must adjust its movement so that it can exert force on the ball. The ball may also interact with a given surface, such as dense grass or pavement, differently than the robot does. On grass, for instance, a soccer ball experiences a drag force that is absent on tarmac, and an incline imparts an accelerating force that alters the ball's usual path. The robot's own locomotion, by contrast, is often less affected by these differences in dynamics, as long as it doesn't slip, so the soccer task is sensitive to terrain variations that walking alone is not.

The robot's hardware includes a variety of sensors that let it perceive its surroundings: they allow it to feel its location, "understand" its position, and "see" parts of its environment. A set of actuators lets it exert forces on itself and on objects. Between the sensors and the actuators sits the computer, or "brain," which translates sensor data into the actions the motors carry out. When the robot moves on snow, it cannot see the snow, but it can feel it through its motor sensors. Soccer is harder than walking, however, so the researchers used cameras on the robot's head and body to add a new sensory modality, vision, alongside its new dribbling skill.
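To make the grass-versus-pavement point concrete, here is a deliberately simple Python sketch of a ball rolling in one dimension; it is not taken from the DribbleBot work. It assumes a constant rolling deceleration on every surface, an extra linear drag term standing in for grass (`drag_coeff`, zero on pavement), and gravity acting along an incline. The function name `roll_distance` and all coefficient values are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def roll_distance(v0: float, drag_coeff: float, slope_rad: float,
                  rolling_decel: float = 0.3, dt: float = 0.001, t_max: float = 20.0) -> float:
    """Distance (m) a ball rolls before it first stops, under a hypothetical 1-D model:

        dv/dt = -rolling_decel - drag_coeff * v - G * sin(slope_rad)

    drag_coeff models extra drag from grass (0 for pavement); a positive slope
    is uphill. Integrated with semi-implicit Euler; stops at v = 0 or t_max.
    """
    x, v, t = 0.0, v0, 0.0
    while v > 0.0 and t < t_max:
        a = -rolling_decel - drag_coeff * v - G * math.sin(slope_rad)
        v = max(v + a * dt, 0.0)  # clamp: ignore the ball rolling back downhill
        x += v * dt
        t += dt
    return x


if __name__ == "__main__":
    kick = 2.0  # initial ball speed (m/s)
    print(f"pavement, flat:         {roll_distance(kick, 0.0, 0.0):.2f} m")
    print(f"grass, flat:            {roll_distance(kick, 1.5, 0.0):.2f} m")
    print(f"pavement, 5 deg uphill: {roll_distance(kick, 0.0, math.radians(5)):.2f} m")
```

On flat pavement the result is close to the analytic value v0²/(2 × 0.3) ≈ 6.67 m; adding grass drag or a 5° incline shortens the roll, which is the kind of terrain-dependent ball behavior a dribbling policy has to adapt to.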

Some terrains still proved difficult for DribbleBot, and there is a long way to go before these robots are as dexterous as their counterparts in nature. The researchers are also eager to apply the lessons learned from DribbleBot to other tasks that require coordinated movement and object manipulation, such as quickly moving a variety of objects from one location to another using arms or legs.

_____________

Disclaimer: Analytics Insight does not provide financial advice or guidance. Also note that the cryptocurrencies mentioned/listed on the website could potentially be scams, i.e. designed to induce you to invest financial resources that may be lost forever and not be recoverable once investments are made. You are responsible for conducting your own research (DYOR) before making any investments. Read more here.