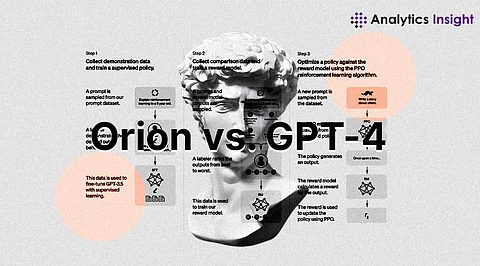

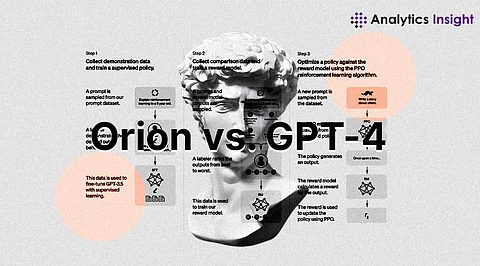

In the rapidly evolving landscape of artificial intelligence, two prominent language models have captured the attention of tech enthusiasts and industry professionals alike: Orion by Anthropic and GPT-4 by OpenAI. As these state-of-the-art AI systems push natural language processing further, people are interested in what separates them.

Although both Orion and GPT-4 are based on the transformer architecture, they differ in design. GPT-4 is the successor to GPT-3, scaled up and trained with more refined techniques. Orion, on the other hand, incorporates Anthropic's proprietary constitutional AI principles, which aim to create more ethical and controllable AI systems.

Orion's constitutional AI approach introduces novel training methods that prioritize safety and alignment with human values. This sets it apart from GPT-4, which focuses more on raw performance and versatility.

The most significant difference between the two models lies in their training data and knowledge cut-off dates. GPT-4 was trained on data that goes up to September 2021, so it has no information about events that occurred afterwards. Orion, however, appears to have a later knowledge cut-off, which makes it usable for analysing more recent events.

The more recent knowledge base gives Orion an edge in discussions about current events and emerging trends. However, GPT-4's broader training dataset may provide it with a more comprehensive understanding of historical contexts.

Currently, GPT-4 appears to be highly versatile, accepting images as well as text as input. This allows it to assess image inputs and produce textual outputs, such as descriptions of a picture or answers to questions about its content. For now, Orion appears to be designed mainly for text-based communication.

The combination of text and vision capabilities makes GPT-4 suitable for tasks such as image recognition and answering questions about images. Orion's text-centric approach, while potentially limiting in some scenarios, may allow for more focused and refined language processing.

Both Anthropic and OpenAI have focused heavily on ethics and fair treatment of all people in their AI systems. Orion's constitutional AI framework, however, is more proactive about building ethical considerations directly into the training of the model.

Orion's constitutional AI aims to create a more controlled and predictable AI system, potentially reducing unexpected behaviours or outputs. GPT-4, while also designed with safety in mind, may require more external guardrails to ensure ethical use.

When it comes to raw performance, both Orion and GPT-4 have demonstrated remarkable capabilities in various language tasks. However, early reports suggest that Orion may have an edge in terms of efficiency, requiring less computational power to achieve similar results.

GPT-4 offers extensive options for fine-tuning and customization, allowing developers to adapt the model for specific use cases or domains. Orion's customization capabilities are less well-documented, but Anthropic has hinted at plans to provide similar flexibility in the future.

Both Orion and GPT-4 are highly developed language models, and both will continue to advance as AI architectures evolve. GPT-4 offers rich multimodal understanding and a broad training dataset, whereas Orion is centred on constitutional AI and greater efficiency. The choice between these two models will likely depend on specific use cases, ethical considerations, and resource availability.

Current trends in AI R&D, including the competition between these different approaches, suggest that the contest itself is driving the growth of NLP capabilities. Whether Orion or GPT-4 becomes the favoured model, the main winners are the clients and industries that use these AI technologies.